Improve PySpark Job Performance: Handling Skewed Data Like a Pro


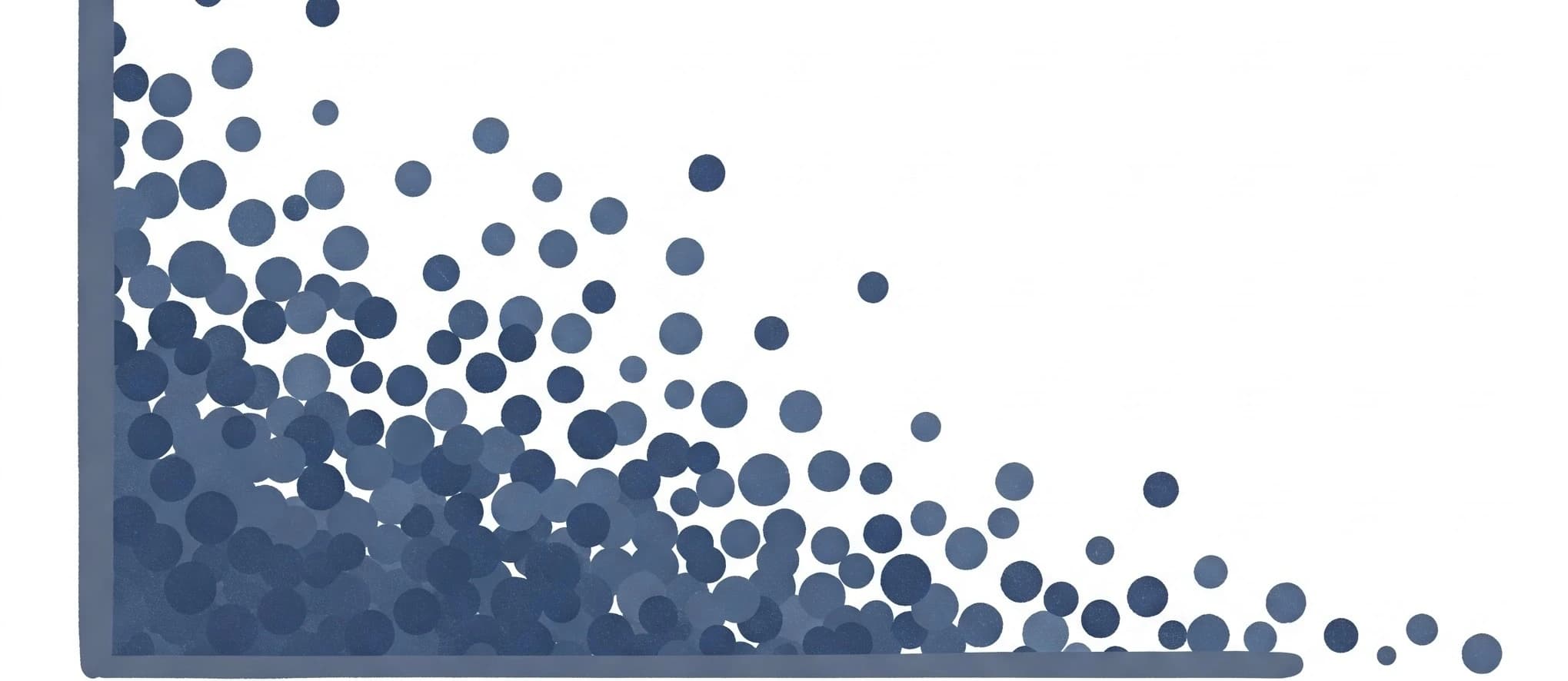

The Silent Killer of PySpark Performance

Data skew is a silent killer in distributed data processing. It’s the reason your Spark job takes hours instead of minutes, why resource utilization resembles a rollercoaster, and why that Friday deployment sometimes turns into a weekend firefight. In this guide, we’ll cut through the noise and offer actionable strategies for handling skewed data in PySpark—whether you’re just starting out or you’re a seasoned engineer. We’ll explain the concepts using relatable analogies, practical examples, and even some tables to help you grasp the nuances quickly.

What Is Data Skew?

Imagine you’re hosting a potluck dinner. You expect that each table will have roughly an equal number of guests so conversations can flow smoothly. But what if one table ends up overcrowded while the others are nearly empty? The crowded table becomes chaotic and overwhelmed, while the others are underutilized. This is similar to data skew in distributed computing: some partitions receive much more data than others, leading to imbalanced workloads.

Why Data Skew Impacts Performance

When data skew occurs, several issues arise:

- Straggler Tasks: Some tasks finish quickly while others, burdened with too much data, lag behind.

- Resource Wastage: Overloaded partitions drain CPU and memory, while other resources remain idle.

- Operational Challenges: Oversized partitions can spill to disk, lengthen garbage-collection pauses, and even cause tasks to fail with out-of-memory errors.

Below is a summary table illustrating the key performance impacts of data skew:

| Impact | Explanation | Analogy |
|---|---|---|
| Straggler Tasks | Uneven data distribution causes some tasks to take much longer than others. | One crowded table vs. several empty ones. |
| Resource Wastage | Overloaded partitions hog CPU and memory while others sit idle. | A few tables having all the guests. |
| Operational Issues | Excessive processing time may lead to memory issues and task failures. | A dinner where one table disrupts the flow. |
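To put rough numbers on the straggler effect, here is a small plain-Python sketch. It assumes one task per partition, all tasks running in parallel, and a made-up throughput of one million rows per second per task; the partition sizes are illustrative, not measurements.

```python
def stage_seconds(partition_rows, rows_per_second=1_000_000):
    """A stage finishes only when its slowest task does."""
    return max(partition_rows) / rows_per_second


def utilization(partition_rows):
    """Share of the reserved task time that is spent on useful work."""
    return sum(partition_rows) / (len(partition_rows) * max(partition_rows))


# Both layouts hold the same 80 million rows across 8 partitions.
balanced = [10_000_000] * 8
skewed = [66_000_000] + [2_000_000] * 7

for name, parts in (("balanced", balanced), ("skewed", skewed)):
    print(name, stage_seconds(parts), round(utilization(parts), 2))
```

Under these assumptions the skewed layout needs 66 seconds instead of 10 for the same data, and the executors are busy only about 15% of the time.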

Common Causes of Data Skew in PySpark

Data skew typically originates from several common sources. The following table summarizes these causes along with their typical remedies:

| Cause | Description | Potential Remedy |
|---|---|---|
| Highly Frequent Keys | Certain keys appear much more often (e.g., "unknown", "default"). | Salting keys, custom partitioning |
| Poor Partitioning Strategy | Inefficient distribution due to unsuitable partitioning logic. | Repartitioning based on key distribution |
| Skewed Join Operations | Joining two datasets where one contains skewed key values. | Broadcast joins, join hints |
| Aggregations on Skewed Data | GroupBy operations on skewed columns lead to imbalanced partitions. | Salting, repartitioning |

Strategies to Optimize Skewed Data in PySpark

Here are several effective strategies to mitigate data skew, each explained with an analogy and example:

Salting Keys

Analogy:

Think of salting keys as spreading out the guests from the overcrowded table to multiple smaller tables. You artificially diversify the seating (or key distribution) so that no single table gets too crowded.

How It Works:

- Append a random number (salt) to the skewed key to create a new, composite key.

- Use this composite key in join or aggregation operations.

- After processing, combine results to remove the salt.
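Why the salt helps can be seen without a cluster. The plain-Python simulation below assigns keys to partitions by hash, first unsalted and then salted. It uses `zlib.crc32` as a stand-in for Spark's internal hash function, so the exact partition numbers will differ from a real job; the key counts are invented.

```python
import random
import zlib
from collections import Counter


def partition_of(key, num_partitions):
    # crc32 is only a deterministic stand-in for Spark's hash partitioner.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_sizes(keys, num_partitions):
    counts = Counter(partition_of(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


random.seed(42)

# 9,000 rows share one hot key; 1,000 rows have distinct keys.
keys = ["unknown"] * 9_000 + [f"user{i}" for i in range(1_000)]

# Append a random salt in [0, 10) to every key.
salted_keys = [f"{k}_{random.randrange(10)}" for k in keys]

plain_sizes = partition_sizes(keys, 8)
salted_sizes = partition_sizes(salted_keys, 8)

print("largest partition without salt:", max(plain_sizes))
print("largest partition with salt:   ", max(salted_sizes))
```

Without the salt, all 9,000 hot rows land in one partition. With it, the hot key becomes ten different keys that hash independently, so the largest partition shrinks substantially.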

Example Code:

```python
from pyspark.sql.functions import col, concat, lit, floor, rand

# Assume df_large is a DataFrame with a skewed key 'user_id'
df_large_salted = (
    df_large
    .withColumn("salt", floor(rand() * 10))
    .withColumn("salted_user_id", concat(col("user_id"), lit("_"), col("salt")))
)
```

Using Broadcast Joins

Analogy:

Imagine one table at the dinner is small and easy to move. Instead of trying to balance heavy tables, you simply send this small table to every other table so they all have the complete setup without needing to redistribute everything.

How It Works:

- Broadcast a small DataFrame so that every worker node has a copy.

- Join the broadcasted DataFrame with the large, skewed DataFrame without shuffling the heavy data.

Example Code:

```python
from pyspark.sql.functions import broadcast

# Assume df_large is the skewed DataFrame and df_small is small enough to broadcast
result = df_large.join(broadcast(df_small), df_large["key"] == df_small["key"])
```

Repartitioning Data

Analogy: Repartitioning is like rearranging the seating in a restaurant. Instead of having one table overloaded, you reorganize the tables so guests are spread evenly across the dining area.

How It Works:

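- Use repartition() to change the number of partitions, optionally hashing on a specific key.

- Hashing on a key spreads distinct keys across the cluster, but every row with the same key still lands in the same partition, so a single hot key stays concentrated. Calling repartition(n) without a column distributes rows round-robin instead.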

```python
# Increase the number of partitions for better load distribution
balanced_df = df.repartition(100, "user_id")
```

Skewed Join Hints

Analogy: Imagine if the restaurant manager knew which tables would be crowded and adjusted the service accordingly. Skewed join hints allow Spark to apply special handling for known skewed keys.

How It Works:

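Use a join hint to signal that a particular key is skewed so the engine can apply special handling to it. Note that the skew hint is a Databricks Runtime feature; open-source Apache Spark does not define it and ignores hints it does not recognize. On Spark 3.x, Adaptive Query Execution (AQE) detects and splits skewed join partitions automatically when spark.sql.adaptive.skewJoin.enabled is on.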

```python
# Databricks-specific: the hint name is "skew" and it marks 'user_id' as skewed.
# Open-source Spark does not recognize this hint and ignores it.
result = df1.hint("skew", "user_id").join(df2, "user_id")
```

Example Code: Tackling Data Skew in PySpark

Below is a complete example that demonstrates how to use the salting keys technique to handle data skew during a join operation:

```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import col, concat, lit, floor, rand, explode, array

# Initialize SparkSession
spark = SparkSession.builder.appName("SkewedDataOptimization").getOrCreate()

# Sample data: skewed DataFrame and a small lookup DataFrame
data_large = [("user1", 100), ("user1", 200), ("user2", 150), ("user1", 50)]
columns = ["user_id", "transaction_value"]
df_large = spark.createDataFrame(data_large, columns)

data_small = [("user1", "Gold"), ("user2", "Silver")]
columns_small = ["user_id", "membership"]
df_small = spark.createDataFrame(data_small, columns_small)

# Salting the large DataFrame: each row gets a random salt in [0, 10)
df_large_salted = (
    df_large
    .withColumn("salt", floor(rand() * 10))
    .withColumn("salted_user_id", concat(col("user_id"), lit("_"), col("salt")))
)

# Generating all salt variations for the small DataFrame
salt_values = list(range(10))
df_small_salted = (
    df_small
    .withColumn("salt", explode(array(*[lit(x) for x in salt_values])))
    .withColumn("salted_user_id", concat(col("user_id"), lit("_"), col("salt")))
    # Keep only the join key and the payload; otherwise user_id and salt
    # exist on both sides and selecting "user_id" after the join is ambiguous
    .select("salted_user_id", "membership")
)

# Perform join on the salted keys
joined_df = df_large_salted.join(df_small_salted, on="salted_user_id", how="inner")

# Show results
joined_df.select("user_id", "transaction_value", "membership").show()

# Stop the SparkSession
spark.stop()
```

In this example:

- Salting: We add a random salt to distribute skewed keys across partitions.

- Join Operation: The join is performed on the composite key (salted_user_id), ensuring a more balanced workload.

Nuances and Best Practices

| Aspect | Consideration | Recommendation |
|---|---|---|
| Salt Range | Too few salt values won’t alleviate skew; too many can add overhead. | Start with a range of 10 and tune as needed. |
| Broadcast Join | Only effective if the smaller DataFrame truly fits in memory. | Check the size of the DataFrame before broadcasting. |
| Repartitioning | Increases parallelism but may incur shuffling overhead. | Use repartition() judiciously, and monitor with Spark UI. |
| Evaluation of Solutions | Users’ code can vary widely, making evaluation challenging. | Implement hidden test cells or use tools like nbgrader for automated feedback. |
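The salt-range trade-off can be estimated with simple arithmetic before running anything. In a salted join, the hot key's rows are divided across the salt values, while the small side is duplicated once per salt value. The function name and the row counts below are hypothetical.

```python
def salting_tradeoff(hot_key_rows, small_side_rows, num_salts):
    """Rough cost of a salted join for one hot key (illustrative helper)."""
    return {
        # Expected rows per salted variant of the hot key.
        "rows_per_salted_hot_key": hot_key_rows / num_salts,
        # The small side is exploded once per salt value.
        "small_side_rows_after_explode": small_side_rows * num_salts,
    }


for num_salts in (1, 10, 100):
    print(num_salts, salting_tradeoff(50_000_000, 20_000, num_salts))
```

Each tenfold increase in the salt range cuts the hot key's largest group by ten but also makes the exploded lookup table ten times larger, which is why a moderate starting range is usually tuned upward only as needed.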

Tecyfy Takeaway

Data skew is a silent killer that can transform an efficient Spark job into a slow, resource-draining nightmare. Using techniques such as salting keys, broadcast joins, repartitioning, and join hints can significantly optimize performance and ensure smoother processing. Think of it as rearranging the seating at a crowded dinner—balancing the load makes everything run more smoothly.

By understanding the nuances, applying the right strategy, and continually monitoring performance, you can effectively tackle data skew in PySpark. Whether you’re a beginner or a seasoned engineer, these strategies and examples empower you to build faster, more efficient data pipelines.

Happy optimizing!